Artificial intelligence (AI) is changing the way wars are conducted. Advances in the technology have led to autonomous weapons systems, unmanned surveillance aircraft, and other military applications, as we see in the current conflict between Israel and Palestine.

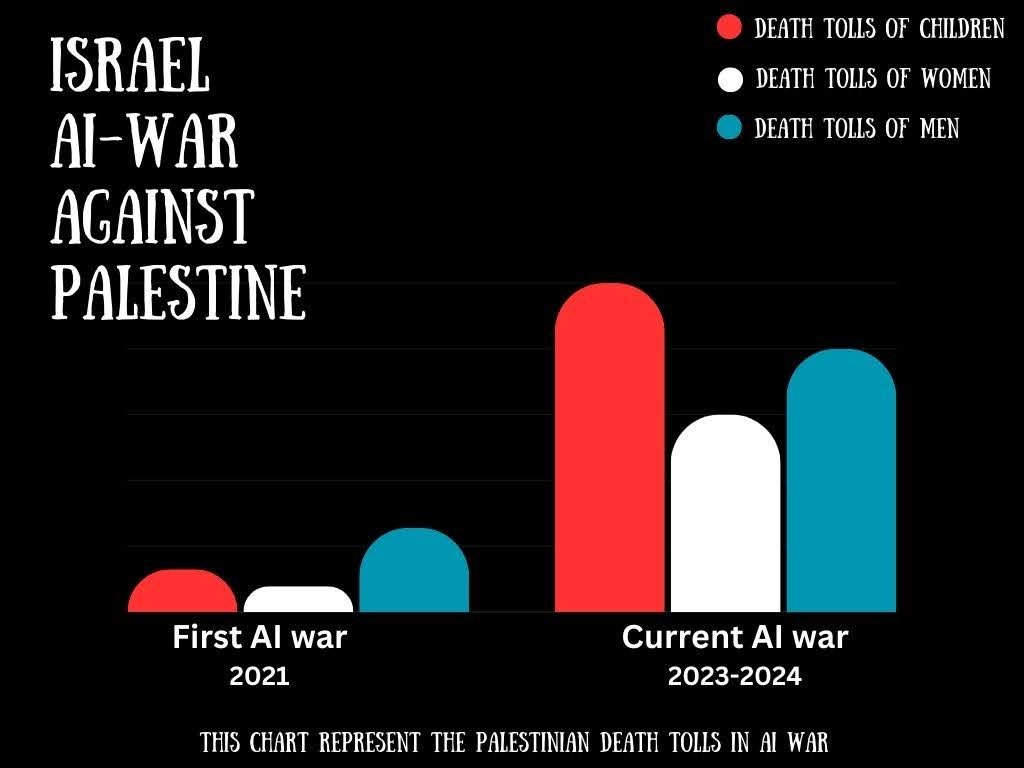

In May 2021, Operation “Guardian of the Walls” marked a turning point in the warfare between Israel and Palestine, resulting in hundreds of casualties in just 11 days. In this first “AI war,” the 11-day conflict between Israel and Gaza, AI expedited the assessment of intelligence regarding the Palestinian Resistance. The IDF collected data from signals intelligence (SIGINT), human intelligence (HUMINT), visual intelligence (VISINT), and geographical intelligence (GEOINT), then fed it all into systems that produced the data used to conduct precision strikes.

This photo was generated by the Canva AI tool

“Alchemist,” “Gospel,” and “Depth of Wisdom”: Israel’s AI programs

In the first AI war, the IDF used three new programs, “Alchemist,” “Gospel,” and “Depth of Wisdom,” each with a specific role. “The Alchemist” provides unit commanders with real-time alerts about potential ground hazards, sent directly to their mobile tablets. “Habsora,” or “The Gospel” in English, uses automated tools to generate targets quickly and to improve the precision and quality of intelligence material as needed. Finally, “Depth of Wisdom” was used to map Gaza’s tunnel network. This analytical tool offers a comprehensive view of the network, covering both above- and below-ground details such as tunnel depth, thickness, and route characteristics.

Because of the use of artificial intelligence in this conflict, at least 230 individuals lost their lives, including 65 children and 39 women, and 1,910 were injured, 90 of them severely. The Palestinian Ministry of Health reported that the injured included 560 children, 380 women, and 91 elderly individuals, all within 11 days. The initial material losses in Gaza totalled tens of millions of dollars. According to UNRWA, Israeli attacks displaced nearly 75,000 Palestinians, with 28,700 seeking refuge in UNRWA-affiliated schools.

In the current conflict, Israel is thought to be using AI on an unprecedented scale.

Israel’s current military operation in Gaza was initiated in response to Hamas’ attack on October 7, which left approximately 1,200 people dead and over 200 Israeli hostages held as of the time of writing. The Israeli military has deployed an AI-powered system called “Gospel,” already used in the first AI war, to identify targets based on recent intelligence. The system was developed to provide targeting recommendations to human analysts, who then decide whether to transmit them to troops on the ground. The technology and logistics division’s website indicates that targeting units can relay these targets to the Air Force, Navy, and ground forces through an application named “Pillar of Fire,” which commanders can access on military-issued smartphones and other devices.

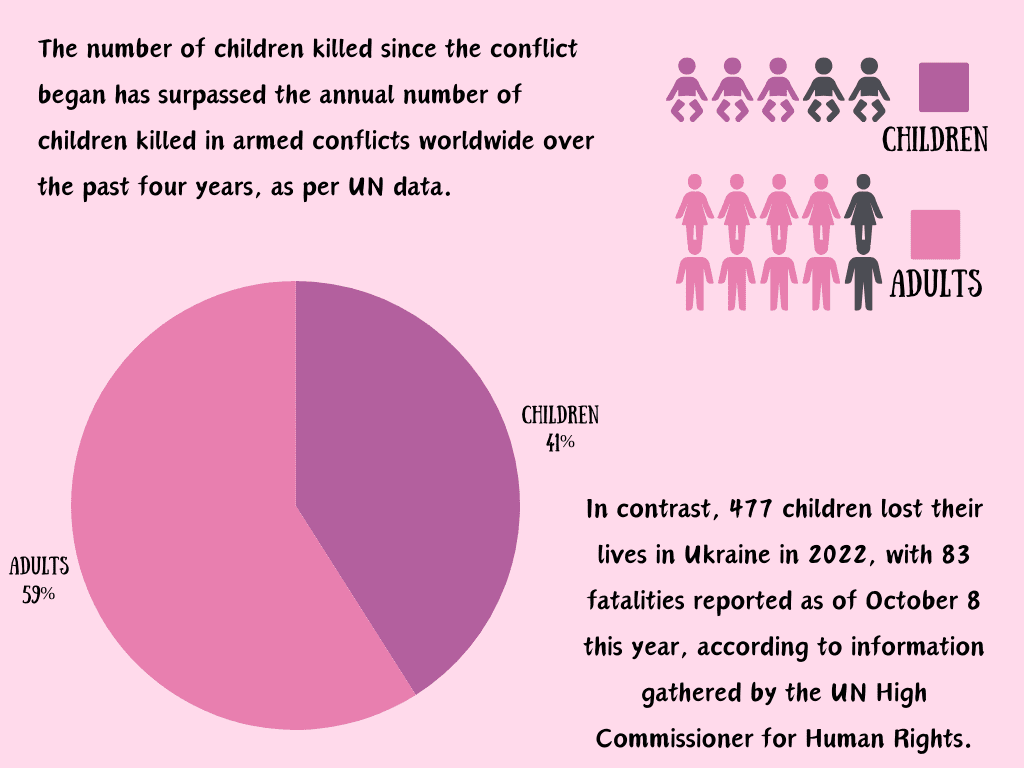

During this ongoing conflict, the “Gospel” system has been used to select targets, including schools, aid organization offices, places of worship, and medical facilities. In more than five months of fighting since the Hamas attack on Israel on October 7, over 30,000 fatalities have been reported in Gaza, with 72,760 individuals sustaining injuries.

Source: The Palestinian Ministry of Health in Ramallah

According to the Washington Post, the ongoing conflict has cost Israel’s government approximately $18 billion, equivalent to $220 million per day. Furthermore, the financial newspaper Calcalist reported that a war lasting five to ten additional months could cost Israel up to $50 billion in total, representing 10% of the country’s GDP.

Israel’s economy is suffering after more than four months of conflict with Hamas, and AI startups and companies have been hit particularly hard. In response, Google revealed in January its plan to allocate $8 million to aid Israeli tech firms and Palestinian businesses. Google specified that $4 million would be directed toward supporting AI startups in Israel, while the remaining $4 million would be allocated to assist early-stage Palestinian startups and businesses in maintaining their operations.

In conflicts such as those in Gaza, where civilians frequently become casualties, AI use in warfare must be strictly controlled to ensure adherence to international humanitarian law and human rights standards. Moreover, the utilization of artificial intelligence in military operations presents ethical and legal challenges, particularly concerning the creation and deployment of autonomous weapon systems. Once activated, these systems can function independently, raising apprehensions about their impact.

How ethical are AI-automated weapons?

Transformative technologies such as AI and machine learning (ML) have fundamentally altered human interaction and will shape the future of warfare.

The ethical issue arises when AI/ML is weaponized to maintain military superiority and advantage on the battlefield. The specific dilemma lies in the potential deployment of fully autonomous lethal weapon systems that operate without human control. While such systems reduce risks to soldiers in ground combat, they also make decisions or take actions based on AI-enabled data without human intervention. Ultimately, addressing these ethically challenging issues requires thorough examination using the ethical processing model.

AI generated a wave of fake news in the current Israeli-Hamas conflict

The technology behind this fake news is known as generative AI, which produces new content by applying machine learning to vast amounts of training data. The output can take the form of text (as with OpenAI’s ChatGPT, Meta’s LLaMA, or Google’s Bard), audio (as with Microsoft’s VALL-E), or images (as with OpenAI’s DALL-E or Stable Diffusion). Producing it is remarkably fast and easy for most users, and, depending on the instructions, the result can be sophisticated enough that people perceive it as indistinguishable from content created by humans.

The relationship between artificial intelligence and fake news is complex and profound. AI can be used to generate and disseminate fake news, but also to identify it and combat the spread of misinformation. The surge of AI-generated misinformation coincides with a persistent worldwide decline in trust in government and media sources of information. Because AI tools have advanced rapidly since Russia’s 2022 invasion of Ukraine, many observers anticipated a more significant role for them in the Israel-Hamas conflict. Throughout the current conflict, generative AI has been used to create deepfake images, videos, and articles that have been widely shared. One notable example is the viral spread, on social media and in traditional media, of a fake story about “the 40 babies beheaded” in a Hamas attack.

“Specifically about the beheaded babies report, we cannot confirm the amount and specific place and everything like that. There have been so many horrible situations and we don’t have time, and we’re currently busy fighting and defending our country. We don’t have the time to check every report.” – The IDF spokesperson

Fake news stories such as this one are particularly impactful when they evoke strong emotions. Two groups of people are using artificial intelligence to disseminate misinformation in the current Israeli-Hamas conflict. One group creates images depicting human suffering, while the other produces AI-generated fabrications that aim to garner support for Israel, Hamas, or the Palestinians by appealing to patriotic sentiments.

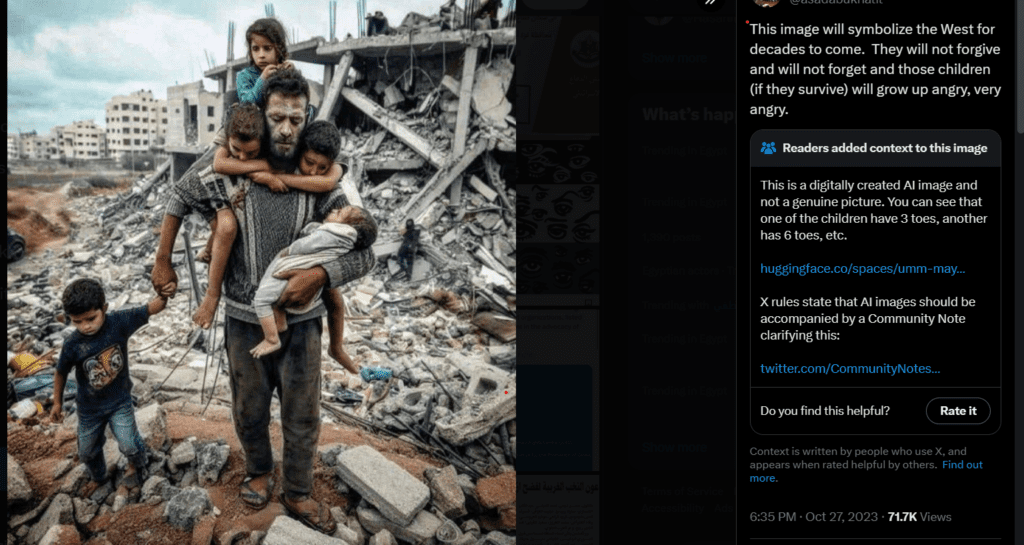

In this example, the first group created images depicting human suffering in Palestine.

Source: X

Various errors and inconsistencies indicate that the image was generated by AI. The man’s right shoulder is unusually high, and the two limbs underneath appear to be growing from his sweater. Another noticeable detail is how the hands of the two boys embracing their father’s neck merge. Additionally, some of the hands and feet in the image have the wrong number of fingers and toes.

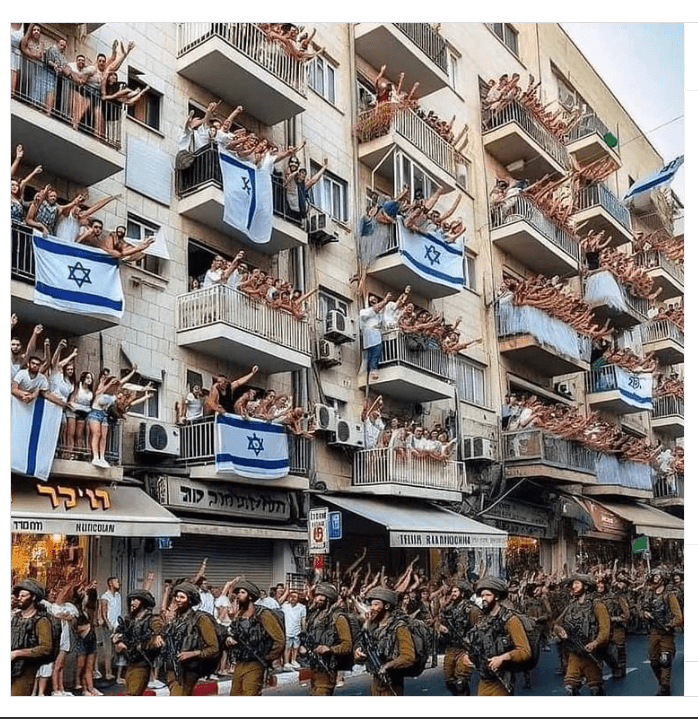

The second group generated AI fabrications that aim to gain support for Israel.

Source: LivefromIsrael Instagram page

In this image, one soldier’s shoulder extends unnaturally, and some figures appear to have more arms than expected. Additionally, a disfigured face is visible in the background, and an older woman seems to lack a lower body. Some of the soldiers depicted also have disfigured faces. Another image shows individuals with peculiar facial features, and with hands and fingers extending beyond normal proportions.

Generative AI poses a significant threat in the public sphere because it can help create misinformation that is more convincing than current methods allow. However, even if generative AI succeeds in making misinformation more appealing, most individuals are rarely, or only minimally, exposed to false information. Moreover, generative AI can enhance the quality of trustworthy news sources by assisting journalists in various areas.

How is social media dealing with fake AI content during the conflict?

In the ongoing conflict between Israel and Palestine, Meta has implemented a series of measures to tackle the increase in harmful and potentially harmful content circulating on its platforms. The policies aim to keep users of its apps safe while upholding freedom of expression for all. Meta says it enforces these policies uniformly worldwide and that there is no truth to the claim that it is intentionally stifling voices. However, content that praises Hamas, which Meta designates as a Dangerous Organization, and violent or graphic material are prohibited on its platforms. The company acknowledges that mistakes can occur, so it provides an appeals process through which individuals can challenge decisions they believe were made in error and have them reviewed.

X, previously known as Twitter, has been deleting recently created Hamas-affiliated accounts and collaborating with other tech firms to stop the spread of “terrorist content” online. It is also actively monitoring antisemitic speech as part of its overall efforts, and it has removed numerous accounts trying to manipulate trending topics. For AI-generated images and manipulated videos, X introduced a new feature called “Notes on Media.” This feature enables users to flag media they suspect is misleading or fake. The resulting notes will be visible on all posts that X’s system identifies as containing the same image.

Source: X platform
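The idea of one note following the same image across many posts can be sketched in a few lines of Python. This is only an illustration, not X’s implementation: the `MediaNotes` class and its methods are hypothetical, and the sketch assumes byte-for-byte identical files, whereas a real platform would also need to match resized or re-encoded copies.

```python
import hashlib


class MediaNotes:
    """Attach a note to an image once and surface it on every post reusing it."""

    def __init__(self):
        self._notes = {}  # image fingerprint -> note text

    @staticmethod
    def fingerprint(image_bytes: bytes) -> str:
        # Assumption: exact matching via SHA-256 of the raw file bytes.
        return hashlib.sha256(image_bytes).hexdigest()

    def add_note(self, image_bytes: bytes, note: str) -> None:
        """Record a note against the image itself, not against a single post."""
        self._notes[self.fingerprint(image_bytes)] = note

    def note_for(self, image_bytes: bytes):
        """Return the note for this image, or None if nobody has flagged it."""
        return self._notes.get(self.fingerprint(image_bytes))
```

Because the note is keyed on the image rather than the post, any later post that uploads the same file gets the note automatically.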

The rise of AI deepfake news may lead to “false memories”

Deepfakes are manipulated media produced using artificial intelligence technology. Although computer-generated imagery is not a recent technical advancement, deepfakes and deepfake apps produce exceptionally realistic results without requiring extensive training or costly equipment. Years of psychological studies have shown that our recollections of past experiences can be altered by subsequent information (known as “the misinformation effect”).

Deepfake technology has proven itself to be a troublingly effective method of spreading misinformation, but a recent study suggests that the impacts of generative AI programs can be more complex than originally feared. A study published in July 2023 in PLOS One found that deepfake videos can distort a viewer’s recollection of the past and their understanding of events.

In the era of generative AI, algorithms can help you detect the truth

Detecting fake news is an intricate process that begins with awareness and education. It is essential to verify the source. Reliable information is usually fact-checked or peer-reviewed. Today, a huge number of individuals depend on the Internet as their primary source of information. Nevertheless, because misinformation is so abundant online, it is crucial to critically examine and assess everything we learn from online sources.

Due to the growing use of generative AI tools across social media platforms, Meta decided to collaborate with other firms to establish universal guidelines for recognizing AI content, as seen in initiatives such as the Partnership on AI (PAI). Photorealistic images produced with Meta AI are now clearly marked upon release to inform viewers that they were “Imagined with AI.”

Furthermore, while AI can worsen the problem of fake news by creating and disseminating disinformation, it can also help solve it through detection, filtering, and countermeasures. Users can employ AI algorithms to detect fake news by examining patterns, language usage, and sources. Natural language processing (NLP) approaches can identify questionable information by analyzing linguistic clues, sentiment, and inconsistencies.
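As a rough illustration of what analyzing linguistic clues can mean in practice, the Python sketch below counts a few surface signals in a piece of text. It is a toy, not a real detector: the word lists, function names, and scoring weights are all assumptions made for this example, and an actual NLP system would learn such signals from labeled data instead of hard-coding them.

```python
import re

# Hypothetical cue lists, chosen only for illustration.
SENSATIONAL_WORDS = {"shocking", "unbelievable", "exposed", "secret", "miracle"}
SOURCE_MARKERS = ("according to", "reported by", "said in a statement")


def linguistic_cues(text: str) -> dict:
    """Count a few surface cues that may warrant a closer look at a text."""
    words = re.findall(r"[A-Za-z']+", text)
    lowered = text.lower()
    return {
        "sensational_words": sum(w.lower() in SENSATIONAL_WORDS for w in words),
        "all_caps_words": sum(len(w) > 3 and w.isupper() for w in words),
        "exclamation_marks": text.count("!"),
        "cites_a_source": any(marker in lowered for marker in SOURCE_MARKERS),
    }


def suspicion_score(text: str) -> int:
    """Combine the cues into a rough score; higher means 'verify this first'."""
    cues = linguistic_cues(text)
    score = (
        cues["sensational_words"]
        + cues["all_caps_words"]
        + cues["exclamation_marks"]
    )
    if not cues["cites_a_source"]:
        score += 2  # arbitrary penalty for naming no source
    return score
```

A high score does not prove a text is false; it only suggests which items a human fact-checker might look at first.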

Finally, AI-powered algorithms used by social media platforms and news aggregators are critical for recommending information to users. If these algorithms favor engagement indicators such as clicks, likes, and shares over content accuracy, they may unintentionally encourage fake news that garners greater attention. Having said that, AI can be trained to prioritize accuracy and reliability in content recommendations, thereby minimizing the spread of disinformation. The effectiveness of AI in combating fake news relies on the responsible deployment of these technologies and the collaborative efforts of stakeholders across various sectors, including technology, media organisations, policymakers, and civil society.
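The trade-off between engagement and accuracy in recommendations can be shown with a small Python sketch. Everything here is hypothetical: the `Post` fields, the 0-to-1 scores, and the `accuracy_weight` parameter are assumptions for illustration, not a description of how any platform actually ranks content.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    engagement: float  # hypothetical normalized clicks/likes/shares, 0 to 1
    accuracy: float    # hypothetical reliability estimate, 0 to 1


def rank(posts, accuracy_weight):
    """Order posts by a weighted blend of accuracy and engagement."""
    def score(post):
        return (
            accuracy_weight * post.accuracy
            + (1 - accuracy_weight) * post.engagement
        )
    return sorted(posts, key=score, reverse=True)


posts = [
    Post("Viral unverified claim", engagement=0.9, accuracy=0.2),
    Post("Verified report", engagement=0.4, accuracy=0.9),
]

# Weight 0.0 ranks purely by engagement; 0.7 lets accuracy dominate.
engagement_first = rank(posts, accuracy_weight=0.0)
accuracy_aware = rank(posts, accuracy_weight=0.7)
```

With these example numbers, the engagement-only ranking puts the viral claim first, while the accuracy-aware ranking puts the verified report first. The hard part in practice is producing a trustworthy accuracy estimate, which this sketch simply takes as given.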

Times For Palestine

Open Source Investigation

Aya El-Koumy

Sources:

Adobe Is Selling AI-Generated Images of Violence in Gaza and Israel (Vice)

WhatsApp’s AI shows gun-wielding children when prompted with ‘Palestine’ (The Guardian)

Fake news can lead to false memories (Neuroscience News)

‘The Gospel’: how Israel uses AI to select bombing targets in Gaza (The Guardian)

Israel’s operation against Hamas was the world’s first AI war (The Jerusalem Post)